Implementing Grasp Evaluation Metrics in Simulation

This blog post serves as documentation of the process of implementing grasp evaluation metrics in the MuJoCo simulation. To provide a complete record, we will first describe our initial approach, which did not yield successful results, before moving on to the final implementation that worked.

The metrics are based on concepts discussed in our previous post. However, for the purposes of this post, we only need to focus on the key inputs required by most of these grasp evaluation metrics:

- Contact Normals

- Contact Points

- Object’s Center of Mass

- Forces Applied

Initial Approach: Leveraging GraspIt!

Our first attempt at implementing grasp evaluation metrics involved using the GraspIt! simulator. Since it is a specialized grasping simulator, we had high hopes that it would facilitate our task.

However, given that we already had an established infrastructure around MuJoCo, we initially did not consider switching simulators. Instead, our plan was to extract the relevant metric computation code from GraspIt! and integrate it into our codebase. Unfortunately, this approach proved unsuccessful due to two key issues:

- GraspIt! is heavily based on Object-Oriented Programming (OOP) in C++, making it difficult to isolate and extract the metric computation logic.

- The code lacked a clear separation between simulation and metric calculation, making refactoring impractical.

Running GraspIt! for Evaluation

Since extracting the code was not feasible, we decided to run the simulator and explore its functionalities to gain insights into its grasp evaluation capabilities. However, we soon discovered that GraspIt! is somewhat outdated.

Despite its last official release being in 2021, several users in the GitHub Issues section reported instability and recommended using the 2017 release. Unfortunately, that version was only compatible with Ubuntu 18, forcing us to create a Docker image to run the simulator in a containerized environment.

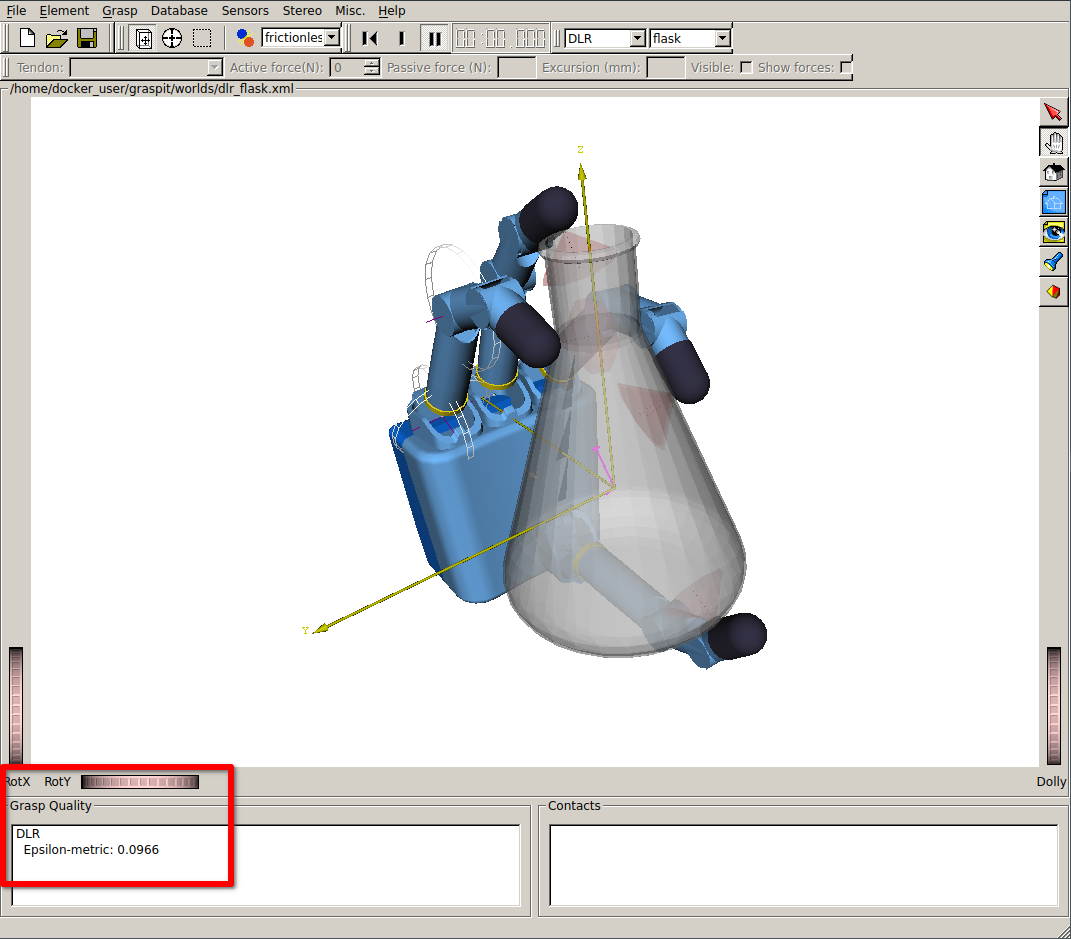

After completing the tutorial, we successfully executed a grasp in a pre-configured environment and computed a grasp metric for a given pose (Figure 1).

Fig 1: GraspIt! Simulator running the dlr_flask environment. The $\epsilon$-metric is displayed in the bottom left corner.

Fig 1: GraspIt! Simulator running the dlr_flask environment. The $\epsilon$-metric is displayed in the bottom left corner.

Challenges with GraspIt!

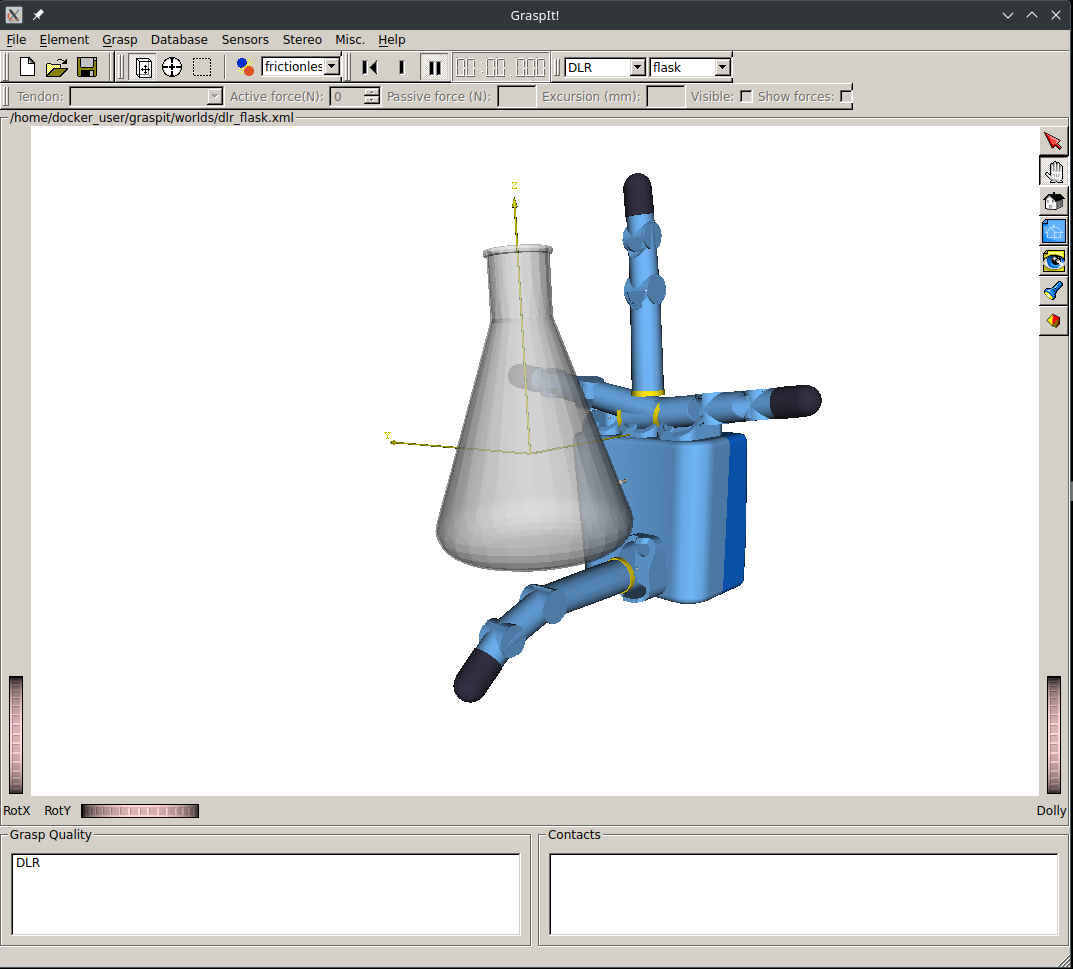

While the user interface was intuitive, we quickly realized that switching to GraspIt! was not a viable option. The simulator frequently crashed, even in simple environments where dynamics were not expected to be an issue (Figure 2). More concerningly, it also broke in more complex setups involving robotic arms and tables.

Fig 2: GraspIt! Simulator with the dlr_flask environment and broken physics

Fig 2: GraspIt! Simulator with the dlr_flask environment and broken physics

This instability, combined with lack of ongoing community support, significantly reduced the practicality of relying on GraspIt!. While a dedicated grasping simulator is an exciting concept, these limitations made it unsuitable for a single PhD student without external maintenance support.

Extracting Data from GraspIt!

Despite its issues, GraspIt! could still be useful for validating our own metric implementation. Since grasp quality evaluation is deterministic, given:

- Contact Normals

- Contact Points

- Object’s Center of Mass

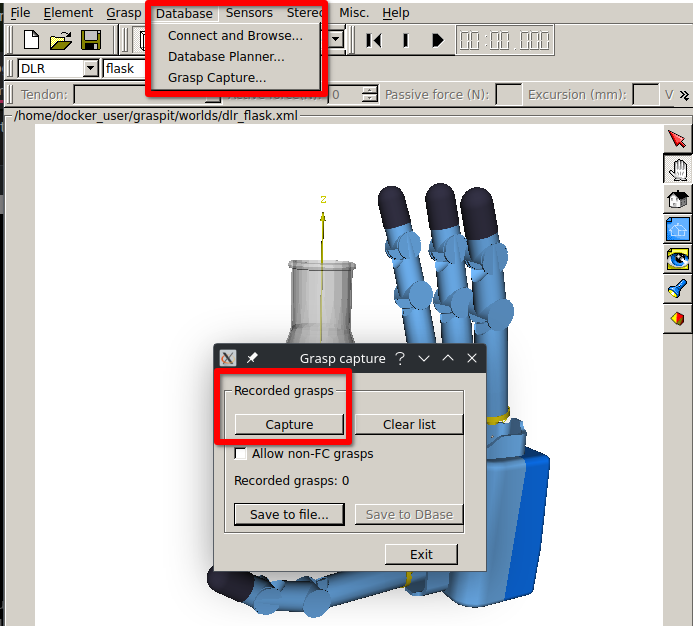

We expected the computed metric values to match. We explored the grasp storage feature (Figure 3), hoping to retrieve relevant grasp information.

Fig 3: Grasp recording interface in GraspIt!

Fig 3: Grasp recording interface in GraspIt!

However, after testing the grasp storage feature, we found that it only saved joint positions of the robotic hand and object positions, rather than the detailed contact information required for our metrics.

Exploring External Grasp Databases

Given that GraspIt! could connect to external databases, we explored potential integrations. One promising resource was the Columbia Grasp Database (CGD), which contains a collection of grasping data for various hands and objects, all evaluated using the $\epsilon$-metric.

However, CGD did not store contact normals. Instead, it provided:

- Contact points

- Hand and object IDs

By loading this information into the GraspIt! physics engine, the simulator could compute the contact normals dynamically. However, we were unable to extract them, leading us back to the same issue.

At this point, we identified a more effective solution and moved on from GraspIt!.

Integrating Grasping Metrics from Dex-Net

Dex-Netis a project from AUTOLAB Berkley that provides code, datasets, and metric implementations for parallel-jaw grasping. The source code is available on their GitHub repository. Although the repository has not been updated in seven years, the entire implementation is in Python, making integration straightforward. Additionally, their metric computation and physics simulation code are relatively independent, requiring minimal effort to adapt to our codebase.

License Considerations

The Dex-Net license permits research use, provided that any work derived from it properly cites the original source. This should not pose an issue for future publications, but it is something to remember. Software licensing can be complex, but the refactoring I performed to integrate the code does not seem to be transformative enough to qualify as “new code.”

Applicability Beyond Parallel-Jaw Grasping

Despite Dex-Net being designed for parallel-jaw grasping, the grasping metrics it provides are agnostic to the gripper, hand, or object being grasped. As a result, the code can be seamlessly applied to anthropomorphic grasping as well. While Dex-Net implements the most important grasping metrics, we found it necessary to develop additional ones to better fit our needs.

Implemented Grasping Metrics

In the end, we integrated the following grasping metrics into our codebase:

- Force-Based Metrics

- Force Closure: Determines whether a grasp can immobilize the object without external force. This is a binary metric (true/false).

- Partial Force Closure: Evaluates whether the grasp can resist a specific wrench. This is also a binary metric.

- Wrench Resistance: Measures the inverse norm of the contact forces required to resist a target wrench. Minimizing this value optimizes grasp efficiency by reducing the required force.

- Min Singular Value: Represents the weakest direction in which the grasp can resist external forces. A higher value indicates a more stable grasp.

- Volume of the Ellipsoid in the Wrench Space: Measures the grasp’s ability to resist external forces and torques by computing the ellipsoid volume in the wrench space. This metric captures the “average” strength across the six principal directions.

- Grasp Isotropy: Assesses the uniformity of force transmission, where higher values indicate a balanced and dexterous grasp. A zero value implies instability, similar to the Min Singular Value.

- Volume of the Grasp Wrench Space Convex Hull: Estimates the grasp’s ability to generate force and torque by computing the volume of the convex hull formed by contact forces. This correlates with dexterity, and low GWS volume is typically associated with low grasp isotropy.

- Ferrari & Canny’s Epsilon Metric: Evaluates the worst-case disturbance the grasp can resist by computing the minimum force required to break the grasp. Higher values indicate a stronger grasp.

- Geometric-Based Metric

- Centroid Distance: Computes the distance between the object’s center of mass and the centroid of the contact points. A lower distance suggests a more stable grasp.

- Joint-Based Metrics

- Joint Angle Deviation: Measures how much the robotic hand’s joint angles deviate from their neutral positions, ensuring comfort and stability.

- Max Joint Angle Deviation: Identifies the joint with the highest deviation, which may indicate stress on the robotic hand’s structure.

MuJoCo: Extracting the Required Inputs

The final step in the pipeline was ensuring that we could access the necessary inputs for the grasping metrics within MuJoCo. Since joint states are already used in the RL cycle, we had direct access to joint-based metrics. However, additional work was required to extract contact forces and contact points.

Without diving too deep into implementation details, this information was retrieved from:

- The variable

data.contact, which stores contact data in MuJoCo. - The method

mujoco.mj_contactForce(), which extracts the forces applied at each contact point.

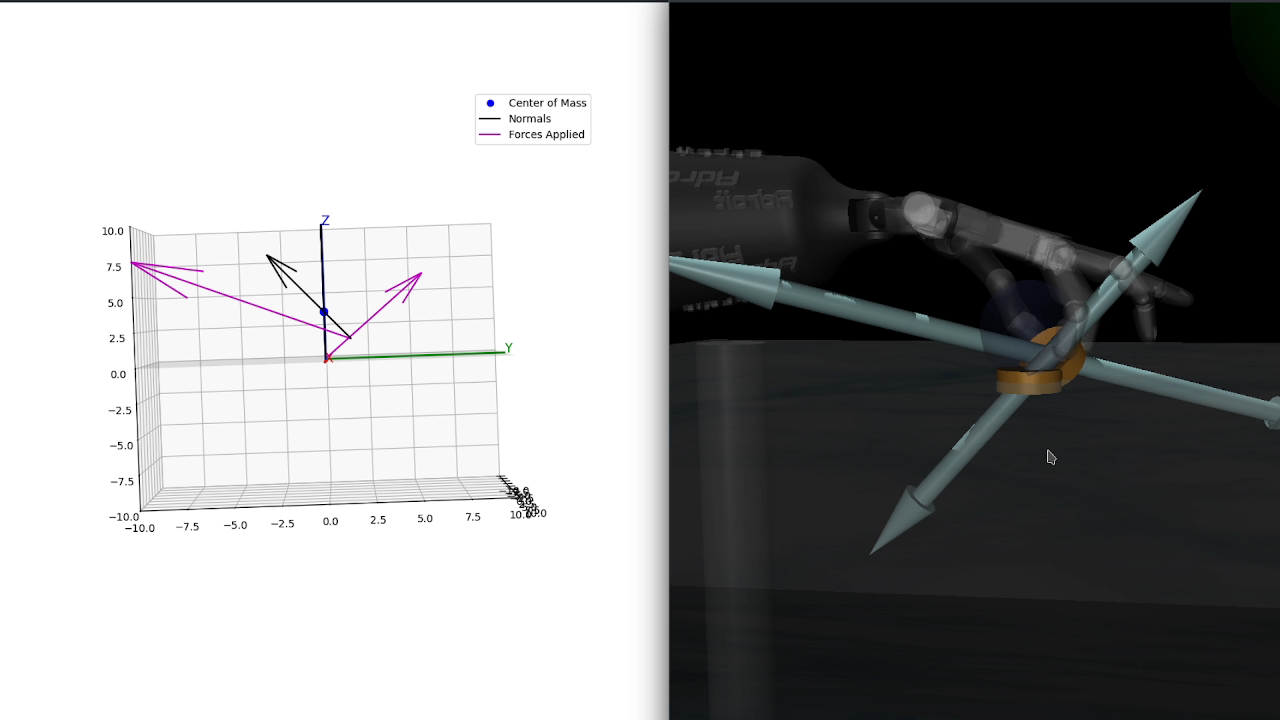

To validate that the extracted data was correct, we developed a visual animation. The following video presents this visualization and verification process:

Testing the Grasping metrics

With the metric extraction working, the next step was to test these metrics on a trained agent. The following video showcases two agents performing a grasp and relocation task with a sphere. These agents were trained using:

- Proximal Policy Optimization (PPO)

- Soft Actor-Critic (SAC)

In the recorded runs, the PPO agent achieved a final reward of 5500, while the SAC agent reached 4500. The reward function penalized distance to the ball, provided a bonus for being inside the green circle, and rewarded fingertip contact with the object.

To ensure a stable and secure grasp, we extracted the grasping metrics at the final time step of the episode, where both agents had already reached their target positions. This setup forced the agents to maintain a secure grasp even after reaching their destination.

The following tables present the computed grasping metrics for both agents. In each table, the best value for each metric is highlighted.

PPO Grasping Metrics

| Method | Quality | Time (s) |

|---|---|---|

| force_closure | 1.0000 | 0.047639 |

| partial_closure | 1.0000 | 0.097560 |

| wrench_resistance | 0.5544 | 0.093842 |

| min_singular | 0.1356 | 0.099701 |

| wrench_volume | 0.9423 | 0.053622 |

| grasp_isotropy | 0.0201 | 0.046712 |

| wrench_space_volume | 0.0003 | 0.065498 |

| ferrari_canny_L1 - 8 | 0.0253 | 2.953378 |

| ferrari_canny_L1 - 4 | 0.0226 | 0.800319 |

| centroid_distance | 0.0035 | 0.000147 |

| joint_angle_dev | 3.0135 | 0.000185 |

| max_joint_angle_dev | 0.5144 | 0.000211 |

SAC Grasping Metrics

| Method | Quality | Time (s) |

|---|---|---|

| force_closure | 1.0000 | 0.053421 |

| partial_closure | 1.0000 | 0.096527 |

| wrench_resistance | 0.4541 | 0.098819 |

| min_singular | 0.1431 | 0.110460 |

| wrench_volume | 1.4599 | 0.051930 |

| grasp_isotropy | 0.0194 | 0.051183 |

| wrench_space_volume | 0.0003 | 0.068499 |

| ferrari_canny_L1 - 8 | 0.0231 | 3.245601 |

| ferrari_canny_L1 - 4 | 0.0207 | 0.913095 |

| centroid_distance | 0.0082 | 0.000135 |

| joint_angle_dev | 1.7061 | 0.000186 |

| max_joint_angle_dev | 0.4884 | 0.000210 |

Analyzing the Results

Visually, PPO appeared to have a more stable grasp, as SAC did not fully utilize the thumb. However, the grasping metrics tell a different story:

- SAC outperforms PPO in the

min_singularvalue andwrench ellipsoid volume, suggesting a more robust grasp capable of resisting external disturbances. - PPO performs slightly better in grasp isotropy and the $\epsilon$-metric (Ferrari-Canny L1), though the values are very close to SAC.

- SAC has better joint-based metrics, which are used to evaluate the human-likeness of the grasp.

Therefore, although PPO achieved a higher reward, analyzing the specific grasping metrics reveals a more nuanced comparison, making it unclear which method truly outperformed the other.

If the focus is on general wrench resistance and human-likeness, SAC appears to be the better option. However, PPO demonstrated slightly better grasp isotropy and force distribution, which could be beneficial in certain scenarios.

⚠️ Note: The wrench resistance metric does not hold much significance in this instance, as no specific wrench was predefined for resistance.

Understanding the Two Ferrari-Canny L1 Metrics

You may have noticed that the tables include two Ferrari-Canny L1 ($\epsilon$-metric) values. The number associated with each entry (e.g., 4 or 8) represents the number of edges used to approximate the friction cone.

- Fewer edges result in a more conservative estimate (underestimating the cone size) but lead to faster computation.

- More edges improve accuracy but at the cost of exponentially increasing computation time.

This trade-off could be relevant if we aim to compute these metrics online during training.

Conclusion

This post documented the implementation of grasping metrics in MuJoCo and applied the concepts introduced in the previous post.

Although we initially explored the GraspIt! simulator, the lack of community support and frequent instability made it unviable. Instead, we found a better alternative in Dex-Net, which provided fully implemented grasping metrics in Python.

After integrating these metrics into our codebase, we tested them on two trained agents (PPO and SAC). The results demonstrated the strengths and weaknesses of each policy, providing valuable insight into the grasp stability and robustness of each agent.