Vanishing Gradients in Deep Reinforcement Learning

In this post, we examine one of the most prevalent problems in Deep Learning (DL): vanishing gradients. The problem has shaped the field, because new architectures and learning methods must be designed with it in mind. Since Deep Reinforcement Learning (DRL) also relies on deep neural networks, it is susceptible to vanishing gradients as well.

We start with a brief theoretical overview of what vanishing gradients are and what can cause them. Part of this analysis applies to DL in general, but we also discuss factors specific to DRL.

We then propose a testing framework to identify which solutions from the literature best fit our specific problem: training the Soft Actor-Critic (SAC) algorithm in the Adroit environment.

Motivation

Deep Reinforcement Learning (DRL) is one of the most variance-prone frameworks in machine learning when it comes to algorithmic performance. This variability is even more pronounced in off-policy algorithms such as Soft Actor-Critic (SAC). The root cause lies in the non-stationary, non-i.i.d. (not independent and identically distributed) nature of the training data, which evolves alongside the policy itself.

As a consequence, researchers face significant challenges when comparing the performance of different DRL algorithms. To account for this inherent stochasticity, it is common practice to evaluate each algorithm over multiple independent trials using different random seeds. The goal is to characterize performance through a distribution rather than a single run.

However, a critical issue arises when some trials fail to converge, even if such failures occur in only a small fraction of the total runs. In such cases, the overall performance distribution can become bimodal—effectively mixing successful and failed runs. This leads to inflated variance, which negatively impacts the statistical power of hypothesis testing.

Statistical tests such as Welch’s t-test assume that each sample is approximately normally distributed around its own mean. Welch’s variant does not require the two variances to be equal. When the outcome includes occasional divergence or collapse in learning, each sample’s variance grows and the two distributions overlap more. This makes it difficult to demonstrate statistically significant differences, even when one algorithm consistently outperforms the other in most cases.
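To make the variance argument concrete, here is a minimal standard-library sketch of Welch’s t-statistic and the Welch-Satterthwaite degrees of freedom. The success rates are hypothetical numbers chosen for illustration, not results from our runs. In this example, a single collapsed run (a score of 0.0) shrinks the statistic from 20 to below 0.1, even though Algorithm A still wins in four of five trials.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for a vs. b."""
    # Squared standard errors, using the sample variance (n - 1 denominator).
    se2_a = statistics.variance(a) / len(a)
    se2_b = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical final success rates over 5 seeds (illustrative only).
alg_a = [0.90, 0.92, 0.91, 0.89, 0.93]
alg_b = [0.70, 0.72, 0.69, 0.71, 0.73]
alg_a_one_collapse = [0.90, 0.92, 0.91, 0.89, 0.00]  # one seed failed to learn

t_clean, df_clean = welch_t(alg_a, alg_b)
t_collapse, df_collapse = welch_t(alg_a_one_collapse, alg_b)
print(f"all runs converge: t = {t_clean:.2f}, df = {df_clean:.1f}")
print(f"one run collapses: t = {t_collapse:.2f}, df = {df_collapse:.1f}")
```

If SciPy is available, scipy.stats.ttest_ind(a, b, equal_var=False) computes the same statistic and also returns a p-value.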

To illustrate this, consider a case study comparing two slightly different variants of the SAC algorithm (Fig. 1). As shown in the success plots, one variant appears to consistently outperform the other in most trials.

Fig 1: Evaluated success rate of two SAC variants. Each curve is averaged over 5 runs.

However, despite the clear visual trend, statistical analysis fails to reject the null hypothesis of no significant difference between the two methods (see Table 1). Notably, while the performance gap appears evident with as few as five trials, formal validation of the results would require at least 24 independent runs to achieve sufficient statistical power—an expensive demand in terms of computational resources.

It is important to emphasize that failing to reject the null hypothesis does not imply that the two distributions are equal. In our analysis, the computed statistical power is only 21.3%. If the observed effect size reflects the true one, this means there is a 78.7% chance of committing a Type II error, that is, failing to detect a real effect. In other words, it is quite possible that Algorithm 2 does in fact outperform Algorithm 1. The variance introduced by the failed runs masks this difference and prevents us from confirming it with statistical significance.

| Exp 1 | Exp 2 | Relative Difference (ε) | Number of Samples | Required Samples | Power | p-value | Significant |
|---|---|---|---|---|---|---|---|
| Alg2 | Alg1 | 0.832 | 5 | 24 | 0.213 | 0.137 | False |

Table 1: Welch’s t-test comparing algorithms 1 and 2.

These concepts deserve a dedicated post of their own, but it’s important to keep in mind that the required number of samples for a statistical test depends on several key factors:

- The desired significance level $\alpha$ (commonly 0.05, i.e., rejecting the null hypothesis when $p<0.05$)

- The required statistical power (typically set to 0.8)

- The relative difference between the means of the two distributions being compared

The relative difference can be formally expressed as:

\[\begin{equation} \begin{split} \epsilon &= \frac{|\mu_1 - \mu_2|}{\sigma_{\text{pool}}} \\ \sigma_{\text{pool}} &= \sqrt{\frac{\sigma_1^2+\sigma_2^2}{2}} \end{split} \end{equation}\]where $\mu_1$ and $\mu_2$ are the means of the two groups, and $\sigma_1$ and $\sigma_2$ are their respective standard deviations. This quantity is Cohen’s d with the pooled standard deviation of two equally sized groups. It is reported as the Relative Difference in the tables.
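The quantities in this list can be approximated with the standard library alone. The sketch below computes ε from the equation above. It also gives normal-approximation estimates of power and of the per-group sample size for a two-sided test. These approximations are our own simplification and tend to be slightly more optimistic than calculations based on the t distribution. For ε = 0.832, Table 1 reports 24 required samples and a power of 0.213 at n = 5, while the approximation gives 23 and roughly 0.26.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def relative_difference(mu1, sigma1, mu2, sigma2):
    """Effect size epsilon: mean difference over the pooled standard deviation."""
    sigma_pool = math.sqrt((sigma1 ** 2 + sigma2 ** 2) / 2)
    return abs(mu1 - mu2) / sigma_pool


def approx_required_samples(eps, alpha=0.05, power=0.8):
    """Per-group sample size for a two-sided two-sample test (normal approximation)."""
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_beta = _STD_NORMAL.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / eps) ** 2)


def approx_power(eps, n, alpha=0.05):
    """Approximate power of a two-sided two-sample test with n samples per group."""
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = eps * math.sqrt(n / 2)
    return _STD_NORMAL.cdf(shift - z_alpha) + _STD_NORMAL.cdf(-shift - z_alpha)


# Larger Entropy vs. Baseline, using the mean and std values listed in Table 2.
eps = relative_difference(13.596, 2.655, 5.512, 6.977)
print(round(eps, 3))                    # 1.531
print(approx_required_samples(0.832))   # 23
```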

Vanishing Gradients

In a neural network, the gradients of the parameters with respect to the loss function are computed using the chain rule. This implies that the gradient of an early-layer parameter is the product of several partial derivatives from the layers that follow. Mathematically, for a scalar loss $\mathcal{L}$, and a parameter $\theta_i$ in the network, we have:

\[\begin{equation} \frac{\partial\mathcal{L}}{\partial\theta_i} = \frac{\partial\mathcal{L}}{\partial h_n} \cdot \frac{\partial h_n}{\partial h_{n-1}} \cdots \frac{\partial h_{i+1}}{\partial \theta_i} \end{equation}\]If each of these factors has a magnitude smaller than 1, their product can shrink exponentially with the number of layers. This leads to vanishing gradients: the gradient approaches zero, and parameters in early layers are no longer updated effectively.
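A toy scalar example makes the exponential shrinkage visible. It is a sketch, not one of our networks. Each "layer" is a single sigmoid unit with a hypothetical weight w = 1, so every local derivative is at most 0.25 and the product decays at least as fast as 0.25 to the power of the depth.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def chain_gradient(depth, w=1.0, h0=0.5):
    """d h_depth / d h_0 for the scalar chain h_{k+1} = sigmoid(w * h_k).

    By the chain rule this is the product of the local derivatives
    w * sigmoid'(w * h_k), and sigmoid' never exceeds 0.25.
    """
    h, grad = h0, 1.0
    for _ in range(depth):
        h = sigmoid(w * h)           # h now holds h_{k+1}
        grad *= w * h * (1.0 - h)    # sigmoid'(z) = sigmoid(z) * (1 - sigmoid(z))
    return grad


for depth in (1, 5, 10, 20):
    print(f"depth {depth:2d}: gradient = {chain_gradient(depth):.3e}")
```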

This issue is particularly relevant in DRL, especially when the reward signal is weak. In algorithms like SAC, the actor never sees the reward directly. Apart from the entropy term, its learning signal is the critic’s gradient with respect to the action. If the critic’s gradients are small, the actor’s gradient updates can vanish as well.
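The dependence of the actor on the critic can be seen in a one-dimensional toy version of the SAC actor loss, α·log π(a|s) - Q(s,a). The action is reparameterized as a = μ + σξ, with no tanh squashing. The two quadratic critics below are hypothetical stand-ins. With the noise ξ held fixed, the log-probability term does not depend on μ, so the gradient reaching μ is exactly -∂Q/∂a. As a result, a nearly flat critic passes almost nothing back to the actor.

```python
import math


def actor_mean_grad(q_fn, mu, sigma, xi, alpha=0.01, eps=1e-6):
    """Central-difference gradient of a one-sample SAC-style actor loss w.r.t. mu.

    loss(mu) = alpha * log pi(a) - Q(a), with a = mu + sigma * xi (reparameterization).
    """
    def loss(m):
        a = m + sigma * xi
        # Gaussian log-density of a under N(m, sigma^2); with xi fixed it does not depend on m.
        log_pi = -0.5 * xi ** 2 - math.log(sigma) - 0.5 * math.log(2 * math.pi)
        return alpha * log_pi - q_fn(a)

    return (loss(mu + eps) - loss(mu - eps)) / (2 * eps)


def sharp_critic(a):
    return -(a - 0.3) ** 2          # hypothetical critic with a clear preferred action


def flat_critic(a):
    return -1e-4 * (a - 0.3) ** 2   # same shape, nearly flat (e.g. low reward variance)


print(actor_mean_grad(sharp_critic, mu=0.0, sigma=0.5, xi=0.2))  # about -0.4
print(actor_mean_grad(flat_critic, mu=0.0, sigma=0.5, xi=0.2))   # about -4e-05
```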

One contributing factor to small gradients in the critic is a low reward variance. Since the critic learns to predict expected returns, if the reward signal has little variability, the value function becomes almost flat, leading to small derivatives. In other words, if the reward $r_t$ has a low variance:

\[\begin{equation} \text{Var}(r_t) \approx 0 \Rightarrow \nabla_\theta V(s_t) \approx 0 \end{equation}\]Monitoring the variance of the reward signal during training can therefore provide valuable insight into learning stagnation.
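Tracking this quantity is cheap. The class below is a minimal sketch that keeps a rolling window of recent rewards. It flags possible stagnation when the window is full and its variance stays below a threshold. The class name, window size, and threshold are hypothetical choices for illustration, not tuned values from our experiments.

```python
import statistics
from collections import deque


class RewardVarianceMonitor:
    """Rolling-window reward variance with a simple stagnation flag (illustrative)."""

    def __init__(self, window=1000, threshold=1e-6):
        self.rewards = deque(maxlen=window)  # oldest rewards drop out automatically
        self.threshold = threshold

    def update(self, reward):
        self.rewards.append(float(reward))

    def variance(self):
        if len(self.rewards) < 2:
            return float("nan")
        return statistics.pvariance(list(self.rewards))

    def stagnating(self):
        # Only judge once the window is full, so early noise does not trigger the flag.
        return len(self.rewards) == self.rewards.maxlen and self.variance() < self.threshold
```

In a training loop one would call update(r) after every environment step and log variance() alongside the actor and critic gradient norms.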

Action Clipping

Even though our network does not apply a tanh activation to squash actions into $[−1,1]$, the environment clamps the actions within this range before scaling them to the actual joint limits. As a result, action values outside $[−1,1]$ are clipped and do not influence the reward, which leads to flat gradients with respect to those actions. This can reduce the reward variance further and exacerbate the vanishing gradient problem.
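A short numerical check illustrates the flat region. The reward function here is a hypothetical stand-in that, like the environment, only sees the clamped action. Its finite-difference derivative is nonzero inside $[-1,1]$ and exactly zero once the action leaves that range.

```python
def clipped_reward(action, target=0.5):
    """Hypothetical reward that, like the environment, only sees the clamped action."""
    executed = max(-1.0, min(1.0, action))  # clamp to [-1, 1] before joint scaling
    return -(executed - target) ** 2


def finite_difference(f, x, eps=1e-6):
    """Central-difference estimate of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


for action in (0.0, 0.9, 2.5, -3.0):
    print(f"a = {action:+.1f}: dr/da = {finite_difference(clipped_reward, action):.3f}")
```

Because the critic is trained on these rewards, nothing in the data distinguishes, say, a = 2.5 from a = 5.0. This is one motivation for the smaller last-layer weights tested later.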

The Dying ReLU Problem

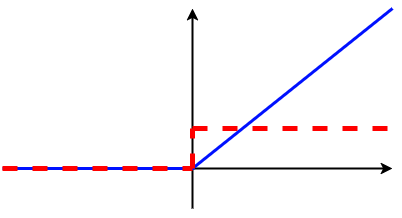

Another source of gradient vanishing is the Dying ReLU phenomenon. The ReLU (Rectified Linear Unit) activation function is defined as:

\[\begin{equation} f(x) = \max(0,x) \end{equation}\]This function outputs zero for all negative inputs and has a derivative of zero in that region. If a neuron’s input is negative, the output is zero, and no gradient flows through that neuron. If many neurons fall into this regime—especially in deep layers—the network can suffer from gradient starvation. This problem is often triggered by:

- High learning rates, which can push weights into large negative values.

- Large negative biases, which systematically shift activations into the non-active (zero-output) region.

Once a neuron consistently outputs zero, it may never recover—this is the essence of the “dying” behavior.
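Dead units can be counted directly. A unit is dead on a batch if its pre-activation is never positive for any sample, since ReLU then outputs zero and passes back zero gradient for the whole batch. The sketch below uses a single randomly initialized layer with hypothetical sizes and inputs drawn from [0, 1]. It shows how a large negative bias kills every unit.

```python
import random


def dead_unit_fraction(bias, n_in=16, n_units=64, n_samples=256, seed=0):
    """Fraction of ReLU units whose pre-activation is never positive on a batch.

    Such units output zero for every sample, so no gradient reaches their weights.
    Sizes, the He-scaled Gaussian weights and the U(0, 1) inputs are illustrative.
    """
    rng = random.Random(seed)
    std = (2.0 / n_in) ** 0.5
    weights = [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_units)]
    batch = [[rng.random() for _ in range(n_in)] for _ in range(n_samples)]
    dead = 0
    for w in weights:
        if all(sum(wi * xi for wi, xi in zip(w, x)) + bias <= 0.0 for x in batch):
            dead += 1
    return dead / n_units


for bias in (0.0, -1.0, -10.0):
    print(f"bias {bias:+.1f}: dead fraction = {dead_unit_fraction(bias):.2f}")
```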

To mitigate this, consider using ReLU variants that allow small gradients in the negative range. One popular option is Leaky ReLU, defined as:

\[\begin{equation} f(x) = \begin{cases} x \text{ if } x > 0 \\ \alpha x \text{ if } x \le 0 \end{cases} \end{equation}\]where $\alpha$ is a small positive constant (e.g., 0.01). This modification helps maintain some gradient flow even when activations are negative.
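For a unit stuck in the negative regime, the gradient of its summed outputs with respect to its bias is the sum of the activation’s local derivatives. The pre-activations below are hypothetical. Under ReLU the bias receives nothing, while Leaky ReLU with α = 0.01 still lets a small gradient through. In PyTorch this activation is available as torch.nn.LeakyReLU, whose default negative_slope is 0.01.

```python
def relu_grad(z):
    """Derivative of max(0, z), taking 0 at z = 0."""
    return 1.0 if z > 0 else 0.0


def leaky_relu_grad(z, alpha=0.01):
    """Derivative of the Leaky ReLU defined above."""
    return 1.0 if z > 0 else alpha


# Hypothetical pre-activations of one unit over a batch: all negative ("dead" under ReLU).
pre_activations = [-2.0, -0.5, -3.1, -1.2]

# d(sum of outputs)/d(bias) is the sum of local derivatives, since dz/db = 1.
print("ReLU:      ", sum(relu_grad(z) for z in pre_activations))
print("Leaky ReLU:", sum(leaky_relu_grad(z) for z in pre_activations))
```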

Weight Initialization

The choice of weight initialization plays a critical role in how gradients propagate through the network. Our baseline uses Xavier initialization (also known as Glorot initialization), which was designed with sigmoid and tanh activations in mind. Strictly speaking, this is not PyTorch’s out-of-the-box behavior: nn.Linear layers default to a scaled Kaiming-uniform scheme, so Xavier has to be applied explicitly. For ReLU and its variants, He initialization (also called Kaiming initialization) is more appropriate. It scales weights according to:

\[\begin{equation} \text{Var}(w) = \frac{2}{n_{\text{in}}} \end{equation}\]where $n_{\text{in}}$ is the number of input connections to the neuron (its fan-in). ReLU zeroes out roughly half of its inputs, and the factor of 2 compensates for this lost variance. This helps preserve the variance of activations across layers in ReLU-based networks.
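A quick simulation illustrates why the 2 in the numerator matters for ReLU networks. The sketch below pushes a random input through a stack of square ReLU layers and reports the mean squared activation relative to the input. The width of 128, depth of 8, and zero biases are hypothetical choices. With fan_in = fan_out, Xavier’s variance 2/(n_in + n_out) reduces to 1/n_in, so each ReLU layer roughly halves the signal, while He’s 2/n_in keeps it on the order of 1. In PyTorch the corresponding initializers are torch.nn.init.xavier_normal_ and torch.nn.init.kaiming_normal_(..., nonlinearity='relu').

```python
import math
import random


def activation_scale_ratio(weight_std, width=128, depth=8, seed=0):
    """Mean squared activation after `depth` ReLU layers divided by that of the input.

    Square layers (fan_in == fan_out == width), zero biases, Gaussian weights.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    q_in = sum(v * v for v in x) / width
    for _ in range(depth):
        pre = [sum(rng.gauss(0.0, weight_std) * v for v in x) for _ in range(width)]
        x = [max(0.0, p) for p in pre]  # ReLU
    return (sum(v * v for v in x) / width) / q_in


width = 128
he_std = math.sqrt(2.0 / width)                # Var(w) = 2 / n_in
xavier_std = math.sqrt(2.0 / (width + width))  # Var(w) = 2 / (n_in + n_out)
print("He:    ", activation_scale_ratio(he_std, width))      # stays on the order of 1
print("Xavier:", activation_scale_ratio(xavier_std, width))  # roughly the He value / 256
```

Because both runs use the same seed and ReLU is positively homogeneous, the Xavier run here is the He run scaled by 1/sqrt(2) at every layer. The gap is therefore exactly the depth-dependent factor 0.5 ** depth.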

Experimental Setup

We evaluated several variants of the SAC algorithm in the Adroit Relocate environment. Below, we present the set of hyperparameters used in the baseline configuration, which serves as the reference point for all subsequent experiments:

- Learning Rate: 0.001

- Replay Buffer Size: 1,000,000

- Batch Size: 256

- Target Update Coefficient ($\tau$): 0.2

- Discount Factor ($\gamma$): 0.98

- Entropy Coefficient: 0.01

- Critic Network Architecture: [512, 512, 256]

- Actor Network Architecture: [512, 512, 256]

For all experiments described below, only a single hyperparameter was varied at a time, while the rest remained identical to the baseline. This controlled setup allows us to isolate the effect of each change.

Based on our theoretical insights, we investigated the following five modifications to the baseline SAC:

- Higher Entropy Coefficient: To amplify the influence of the reward signal and promote exploration, we increased the entropy coefficient to 0.1.

- Leaky ReLU Activation: All ReLU activations in both the actor and critic networks were replaced with Leaky ReLU, aiming to mitigate the “dying ReLU” problem and preserve gradient flow.

- Lower Learning Rate: The learning rate was reduced to 0.0003 to explore whether smaller updates help stabilize training and prevent gradient collapse.

- He Initialization: We replaced the default Xavier initialization with He initialization in both actor and critic networks, better aligning with ReLU-based architectures.

- Smaller Weights in the Actor’s Final Layer: To encourage action outputs to remain within the meaningful $[-1,1]$ range before environment scaling, the final layer of the actor was initialized with weights sampled from a uniform distribution $\mathcal{U}(-10^{-3}, 10^{-3})$.
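The one-change-at-a-time protocol can be encoded in a frozen configuration object. Every variant is then derived from the baseline, and the difference can be checked automatically. The field names and the string labels for activation and initialization are hypothetical and are not taken from our training code. In PyTorch, the last-layer bound would map to something like torch.nn.init.uniform_(layer.weight, -1e-3, 1e-3).

```python
from dataclasses import dataclass, fields, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class SACConfig:
    # Baseline values from the list above; field names are hypothetical.
    learning_rate: float = 1e-3
    buffer_size: int = 1_000_000
    batch_size: int = 256
    tau: float = 0.2
    gamma: float = 0.98
    entropy_coef: float = 0.01
    critic_hidden: Tuple[int, ...] = (512, 512, 256)
    actor_hidden: Tuple[int, ...] = (512, 512, 256)
    activation: str = "relu"
    weight_init: str = "xavier"
    # None = use weight_init everywhere; otherwise U(-b, b) for the actor's last layer.
    actor_last_layer_bound: Optional[float] = None


BASELINE = SACConfig()

# One-factor-at-a-time variants: each differs from the baseline in a single field.
VARIANTS = {
    "Higher Entropy Coefficient": replace(BASELINE, entropy_coef=0.1),
    "Leaky ReLU": replace(BASELINE, activation="leaky_relu"),
    "Lower Learning Rate": replace(BASELINE, learning_rate=3e-4),
    "He Initialization": replace(BASELINE, weight_init="he"),
    "Smaller Last Layer Weights": replace(BASELINE, actor_last_layer_bound=1e-3),
}


def changed_fields(config, reference=BASELINE):
    """Names of the fields where `config` differs from `reference`."""
    return [f.name for f in fields(config)
            if getattr(config, f.name) != getattr(reference, f.name)]


for name, cfg in VARIANTS.items():
    print(f"{name}: {changed_fields(cfg)}")
```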

From prior experiments, we observed that if gradient collapse occurred (i.e., the actor’s gradients vanished), it typically happened within the first 100,000 training steps. To ensure comprehensive coverage, each training run was extended to 300,000 steps.

Each experimental configuration was run with 15 independent seeds, resulting in a total of 90 runs across all variants. These experiments amounted to 27 compute-days, most of which were executed in parallel over a single weekend.

Statistical Analysis

We begin our analysis by examining the distribution of gradients in the actor network. Based on prior experiments, we identified that vanishing gradients primarily affected the actor, while exploding gradients were never observed. As a result, larger gradient magnitudes in the actor network are generally indicative of healthier learning dynamics.

Comparison between Baseline and Single Solutions

Figure 2 presents strip plots of actor gradient magnitudes for all experimental conditions. These plots display the interquartile range (25th–75th percentiles) along with the median, offering a clear view of both the spread and skewness of each distribution. This type of visualization is particularly effective for identifying asymmetries, outliers, and the concentration of values, all of which help assess the robustness and effectiveness of each modification to the baseline.

Fig 2: Strip plot of actor network gradients at the final training steps. The plot illustrates the distribution of gradient magnitudes between the 25th and 75th percentiles, along with the median value.
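The numbers behind each strip plot are three quantiles per configuration. The minimal standard-library sketch below computes them. The gradient magnitudes are hypothetical values for one configuration (one per seed), including two collapsed runs near zero. We pass method="inclusive", which interpolates the same way as NumPy’s default percentile, so values may differ slightly under other quantile conventions.

```python
import statistics


def strip_plot_summary(values):
    """25th percentile, median and 75th percentile, as drawn in the strip plots."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q1, median, q3


# Hypothetical final actor-gradient magnitudes for one configuration (one per seed).
grads = [0.02, 0.05, 4.1, 7.9, 9.3, 11.0, 12.4]
q1, median, q3 = strip_plot_summary(grads)
print(f"Q1 = {q1:.3f}, median = {median:.3f}, Q3 = {q3:.3f}, IQR = {q3 - q1:.3f}")
```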

As observed in Figure 2, the baseline configuration exhibits a median gradient value near zero, which aligns with the high number of runs that suffered from vanishing gradients. Analyzing the distribution across all methods, we find that each proposed modification improves upon the baseline, as indicated by a noticeable increase in median gradient magnitude.

However, many of these methods still exhibit high variance, suggesting that a subset of runs may still be collapsing, albeit less frequently. Among all tested configurations, the Higher Entropy Coefficient and the Smaller Last Layer Weights appear to be the most stable. They not only achieve higher median gradient values but also show reduced variance, indicating more consistent learning across seeds.

| Exp 1 | Exp 2 | Value 1 (mean ± std) | Value 2 (mean ± std) | Relative Difference (ε) | Number of Samples | Required Samples | Power | t-statistic | p-value | Significant |
|---|---|---|---|---|---|---|---|---|---|---|
| Larger Entropy | Baseline | 13.596 ± 2.655 | 5.512 ± 6.977 | 1.531 | 15 | 9 | 0.977 | 4.052 | 3.751e-04 | True |
| He-norm Initialization | Baseline | 8.143 ± 6.359 | 5.512 ± 6.977 | 0.394 | 15 | 102 | 0.181 | 1.043 | 0.153 | False |
| Leaky ReLU | Baseline | 7.787 ± 5.751 | 5.512 ± 6.977 | 0.356 | 15 | 125 | 0.156 | 0.942 | 0.177 | False |
| Lower LR | Baseline | 8.097 ± 5.992 | 5.512 ± 6.977 | 0.398 | 15 | 101 | 0.183 | 1.052 | 0.151 | False |
| Reduced Last Layer | Baseline | 10.881 ± 6.061 | 5.512 ± 6.977 | 0.822 | 15 | 25 | 0.584 | 2.174 | 0.0192 | True |

Table 2: Welch’s t-test comparing each single modification with the baseline.

As shown in the statistical analysis, only the Higher Entropy Coefficient and the Smaller Last Layer Weights configurations exhibit statistically significant improvements in mitigating vanishing gradients. It is worth noting that these two methods are the ones specifically tailored to reinforcement learning: one enhances the reward signal, and the other constrains action outputs to lie within a meaningful range.

However, the fact that the remaining methods did not pass the statistical significance threshold does not necessarily imply they are ineffective. In fact, these methods showed low statistical power, suggesting that the lack of significance may be due to insufficient sample size rather than the absence of a real effect. Nonetheless, since we are unable to reliably confirm their effectiveness within our current computational budget, it is practical to discard them for this use case.

Interestingly, the two methods that most effectively maintain gradient flow in the actor network are also the ones that yield the highest cumulative episodic rewards, as illustrated in Figure 3. However, due to the high variance in reward trajectories, especially in the early stages of training, a robust statistical comparison of performance was not feasible. Such variance is expected in DRL, particularly when evaluating early training dynamics, where exploration plays a significant role.

Fig 3: Strip plot of cumulative reward at test time. The plot illustrates the distribution of cumulative reward between the 25th and 75th percentiles, along with the median value.

Comparison between All Methods and Best Methods

To assess whether discarding the less effective methods causes us to miss any potential benefits, we conducted an additional experiment combining the two best-performing methods—Higher Entropy Coefficient and Smaller Last Layer Weights—and compared this configuration against a combination of all five proposed modifications. Each configuration was evaluated over 15 independent trials, and results were also compared against both the baseline and the individual best methods.

Fig 4: Strip plot of actor network gradients at the final training steps. The plot illustrates the distribution of gradient magnitudes between the 25th and 75th percentiles, along with the median value.

As illustrated in Figure 4, combining only the two best-performing methods (Higher Entropy Coefficient and Smaller Last Layer Weights) mitigates vanishing gradients better than combining all five modifications. Remarkably, applying either of the two best methods individually also outperforms the full combination of all techniques. This suggests that interactions between multiple changes may introduce unintended side effects or diminishing returns. Alternatively, one of the modifications may cancel out the benefits of the others.

Nevertheless, it is worth emphasizing that all configurations in Figure 4 show improved performance relative to the baseline, indicating that each method contributes some degree of benefit—even if not all combinations are statistically significant or practically optimal when used together.

| Exp 1 | Exp 2 | Value 1 (mean ± std) | Value 2 (mean ± std) | Relative Difference (ε) | Number of Samples | Required Samples | Power | t-statistic | p-value | Significant |
|---|---|---|---|---|---|---|---|---|---|---|
| All methods | Baseline | 9.436 ± 2.976 | 5.512 ± 6.977 | 0.732 | 15 | 31 | 0.477 | 1.936 | 0.034 | True |
| Best methods | All methods | 13.035 ± 4.963 | 9.436 ± 2.976 | 0.88 | 15 | 22 | 0.636 | 2.327 | 0.0146 | True |
| Larger Entropy | All methods | 13.596 ± 2.655 | 9.436 ± 2.976 | 1.475 | 15 | 9 | 0.974 | 3.903 | 2.773e-04 | True |
| Reduced Last Layer | All methods | 10.881 ± 6.061 | 9.436 ± 2.976 | 0.303 | 15 | 173 | 0.124 | 0.801 | 0.216 | False |
| Best methods | Baseline | 13.035 ± 4.963 | 5.512 ± 6.977 | 1.243 | 15 | 12 | 0.905 | 3.288 | 0.00148 | True |

Table 3: Welch’s t-test comparing the combined configurations with each other, with the individual best methods, and with the baseline.

Learning Rate and Leaky ReLU

Although we did not achieve statistical significance to validate the benefits of Leaky ReLU (the third-best method in the strip plot), nor to conclusively discard Lower Learning Rate and He Initialization (the worst-performing methods in the strip plot), we conducted one final set of experiments to gain further insight into the impact of these hyperparameters.

- No Lower Learning Rate: All methods are applied except the reduced learning rate. The goal is to determine whether the lower learning rate was responsible for the observed performance drop in the All Methods configuration compared to the Best Methods.

- No He Initialization: All methods are applied except He initialization, reverting to Xavier initialization. This test aims to evaluate whether He initialization negatively influenced performance when combined with other methods.

- Best Methods + Leaky ReLU: Combines the two best methods—Reduced Last Layer and Larger Entropy—with Leaky ReLU, to assess whether this third-best method adds further benefit when paired with the strongest configuration.

The results of these experiments are shown in Figure 5, where we compare the new configurations against the Baseline, All Methods, and Best Methods, focusing on the actor gradient magnitudes as a key indicator of learning stability and effectiveness.

Fig 5: Strip plot of actor network gradients at the final training steps. The plot illustrates the distribution of gradient magnitudes between the 25th and 75th percentiles, along with the median value.

As shown in the strip plot, He initialization appears to be the most likely detrimental factor among the tested methods. Two observations support this. The No He experiment, which combines all methods except He initialization, produces actor gradients very similar to the Best Methods configuration. In contrast, the No Lower Learning Rate (No LR) experiment, which includes He initialization, is the only one not significantly better than the baseline, as confirmed in Table 4.

| Exp 1 | Exp 2 | Value 1 (mean ± std) | Value 2 (mean ± std) | Relative Difference (ε) | Number of Samples | Required Samples | Power | t-statistic | p-value | Significant |
|---|---|---|---|---|---|---|---|---|---|---|
| All methods | Baseline | 9.436 ± 2.976 | 5.512 ± 6.977 | 0.732 | 15 | 31 | 0.477 | 1.936 | 0.034 | True |
| Best methods | All methods | 13.035 ± 4.963 | 9.436 ± 2.976 | 0.88 | 15 | 22 | 0.636 | 2.327 | 0.0146 | True |
| Best methods | Baseline | 13.035 ± 4.963 | 5.512 ± 6.977 | 1.243 | 15 | 12 | 0.905 | 3.288 | 0.00148 | True |
| Best methods + LReLU | Baseline | 11.79 ± 3.393 | 5.512 ± 6.977 | 1.144 | 10 | 14 | 0.768 | 2.879 | 0.00441 | True |
| No He | Baseline | 10.245 ± 4.192 | 5.512 ± 6.977 | 0.822 | 10 | 25 | 0.496 | 2.031 | 0.027 | True |
| No LR | Baseline | 6.839 ± 6.681 | 5.512 ± 6.977 | 0.194 | 10 | 417 | 0.0752 | 0.457 | 0.326 | False |

Table 4: Welch’s t-test comparing the combined and ablated configurations with the baseline and with each other.

That said, while the lower learning rate does appear to slightly reduce actor gradient magnitudes, it does not seem to be the main contributor to vanishing gradients—especially when combined with the best-performing methods. It may introduce mild degradation, but not enough to invalidate the configuration.

Additionally, incorporating Leaky ReLU into the Best Methods setup does not significantly improve actor gradient magnitudes. However, it slightly reduces variance, suggesting it may offer more stable behavior across runs, even if the overall impact on learning strength is modest.

Proposed Configuration

Based on the statistical analysis—albeit somewhat limited by computational constraints—we recommend that all subsequent RL experiments adopt the following configuration: Reduced Last Layer, Larger Entropy, and Leaky ReLU.

The rationale for the first two is straightforward: both demonstrated clear benefits in mitigating vanishing gradients and promoting stable learning. As for Leaky ReLU, although it did not produce significant improvements nor detriments in gradient magnitude, it introduces a non-zero gradient in the negative activation range, which could be beneficial in RL contexts. Specifically, since the actor must output actions constrained to $[−1,1]$ and the critic may need to model negative values, allowing some gradient flow in negative activations may help prevent potential optimization issues down the line.

Regarding the learning rate, we will maintain the current baseline value for now, as lowering it did not provide clear benefits. However, we consider it safe to decrease the learning rate in future experiments if the specific use case suggests that smaller updates could improve stability or performance.

Reward Variance and Gradients

At the beginning of this post, we suggested a possible correlation between reward variance and the magnitude of network gradients. Through our experiments, we empirically observed that reward variance is a strong indicator of whether the reinforcement learning agent is effectively learning. In particular, when the variance is close to zero—meaning the agent consistently receives the same reward—it becomes impossible for the agent to distinguish between better and worse actions. As a result, learning stalls.

This observation highlights a key advantage of the exploration bonus introduced by the entropy coefficient in SAC. By encouraging exploration, the entropy term increases the variability of the reward signal, which in turn promotes gradient flow and kick-starts the learning process, especially in the early stages.

To support this analysis, we present three plots that provide complementary views of the learning dynamics: reward variance, actor gradient magnitudes, and critic gradient magnitudes.

Fig 6: Reward variance throughout training, averaged over the 15 trials of the Best Methods configuration.


As shown in the plots, the reward variance and the critic’s gradient magnitudes exhibit a similar, roughly exponential growth pattern, suggesting a potential correlation between them. Notably, the critic’s gradients display an early peak at the beginning of training that is not reflected in the reward variance curve. This initial spike is likely due to the entropy bonus, which injects exploration-driven variability into the critic’s objective before the environment begins providing meaningful reward feedback.

Aside from this early divergence, the overall trends appear visually aligned, supporting the hypothesis that higher reward variance is associated with stronger gradient signals in the critic—and, by extension, improved learning dynamics.


When comparing the plots of critic and actor gradient magnitudes, we observe that the actor’s gradients appear to be a scaled-down version of the critic’s, following a similar overall shape and progression. While we currently lack more rigorous quantitative evidence, this visual similarity allows us to postulate an indirect correlation between reward variance and the actor’s gradients—mediated by the critic network. Since the actor in SAC is updated through gradients propagated from the critic, it is reasonable to assume that any improvements in the critic’s learning signal—driven by increased reward variance—can in turn facilitate stronger and more informative gradient updates for the actor.
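One way to put a number on this visual similarity is to correlate the logged series; this is offered as a sketch, not an analysis we ran. Spearman’s rank correlation suits the roughly exponential growth, because it only assumes a monotonic relationship. The series below are hypothetical. All three quantities trend upward over training, so a high correlation across time steps partly reflects that shared trend. Correlating values across seeds at a fixed training step would be a stricter test.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equally long series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical per-checkpoint averages (illustrative, not our logged values).
reward_variance = [0.01, 0.02, 0.05, 0.11, 0.24, 0.52]
critic_grad_norm = [0.8, 0.3, 0.4, 0.7, 1.5, 3.1]  # includes an early spike
print(pearson(reward_variance, critic_grad_norm), spearman(reward_variance, critic_grad_norm))
```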

Conclusions

In this post, we introduced the issue of vanishing gradients, a phenomenon that was compromising the statistical evaluation of SAC-based algorithms. We identified both general causes of vanishing gradients—such as problematic activation functions and initialization schemes—and reinforcement learning-specific factors, such as low reward variance and poor exploration. Based on theoretical considerations, we proposed a set of targeted modifications to address these issues.

Our statistical analysis revealed that the most effective strategies for mitigating vanishing gradients were: (1) increasing the entropy coefficient, and (2) reducing the magnitude of the weights in the actor’s final layer. Notably, both of these are domain-specific solutions rooted in RL principles. As for Leaky ReLU, while it did not significantly improve gradient magnitudes in our experiments, it also did not harm the learning process. Moreover, its widespread empirical validation in the literature and its ability to alleviate issues inherent to standard ReLU activations—such as the “dying ReLU” problem—make it a safe and theoretically grounded choice.

While some of the statistical analyses were limited by computational constraints, we believe the experiments provided sufficient insight to guide more comprehensive evaluations in future work.

Finally, this post marks the first use of our newly implemented statistical evaluation framework. We found that this framework was instrumental in drawing data-driven conclusions, helping us move beyond intuition and anecdotal evidence. It significantly improved our ability to interpret results with clarity and confidence.