Simulation Tools

Choosing a simulator for Reinforcement Learning is not trivial. Each simulator has multiple characteristics that could influence the choice, and some details are far too complex for someone who is just starting out. The most practical strategy seems to be choosing a simulator based on the following characteristics: integration with Reinforcement Learning frameworks, support for various sensor modalities, open-source availability, benchmarking tools, and documentation and community support.

These topics are not usually the focus when deciding on a simulator; however, they make sense in the context of my PhD thesis. Firstly, the most used simulators all have reasonably good physics engines, and determining which one is best on this metric is difficult. Therefore, I use community support as a proxy: a simulator that many researchers rely on is likely to be among the better ones. Secondly, open-source simulators that offer various sensor modalities, parallelization support and benchmarking tools promise to expedite my research, because I can more easily reuse resources created by others. Lastly, choosing a simulator that integrates with Reinforcement Learning frameworks will be useful in the first steps of research. Reinforcement Learning algorithms normally require specific data and behaviors from the simulator that other fields do not need. Therefore, finding a tool that already implements them will save a lot of setup time.

At the end of this analysis, I choose to start my research using MuJoCo with Farama’s Gymnasium. Given the nature of this choice, it is advisable to revisit the decision in one year’s time. By then, my understanding of robotics and simulators should have improved significantly.

Technical Properties

In searching for simulators to use specifically with Reinforcement Learning, I found two articles:

#1 “Simulation tools for model-based robotics: Comparison of Bullet, Havok, MuJoCo, ODE and PhysX”, 2015

#2 “Comparing Popular Simulation Environments in the Scope of Robotics and Reinforcement Learning”, 2021

The idea I got from analyzing these papers (and some online forums) is that there are many valid options for training RL algorithms on robots, such as MuJoCo, Gazebo, PyBullet and Webots.

In the papers, the authors cannot say explicitly which simulator is best at physics simulation, because different simulators outperformed the others on different metrics. The authors’ discussion always seemed to be centered on simulation speed versus accuracy. In Deep Reinforcement Learning, simulation speed is very important, because collecting data quickly helps to mitigate the high variance of RL algorithms. However, to transfer the learned policies to the real world, the simulation needs to be as accurate as possible.
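The link between data volume and variance can be illustrated with a small sketch. It is not taken from either paper: the episode returns are drawn from a made-up Gaussian (mean 1.0, standard deviation 5.0), and the function names are hypothetical. It only shows that an estimate averaged over more episodes fluctuates less between experiments, which is why a faster simulator helps.

```python
import random
import statistics


def estimate_return(num_episodes, rng):
    """Average the returns of `num_episodes` simulated episodes.

    Each return is sampled from a Gaussian with mean 1.0 and standard
    deviation 5.0. These numbers are illustrative assumptions only.
    """
    returns = [rng.gauss(1.0, 5.0) for _ in range(num_episodes)]
    return statistics.fmean(returns)


def spread_of_estimates(num_episodes, repeats=200, seed=0):
    """Standard deviation of the estimate across repeated experiments."""
    rng = random.Random(seed)
    estimates = [estimate_return(num_episodes, rng) for _ in range(repeats)]
    return statistics.stdev(estimates)


# Same noisy task, two data budgets: few episodes versus many episodes.
small_batch = spread_of_estimates(num_episodes=10)
large_batch = spread_of_estimates(num_episodes=1000)
print(f"spread with 10 episodes:   {small_batch:.3f}")
print(f"spread with 1000 episodes: {large_batch:.3f}")
```

With independent samples, the spread shrinks roughly with the square root of the number of episodes, so a simulator that is 100 times faster yields an estimate about 10 times less noisy for the same wall-clock time.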

In the end, the authors conclude that MuJoCo is not the most accurate simulator, but they recognize its specialization in the Reinforcement Learning paradigm. MuJoCo also tends to be faster. PyBullet and Webots presented better results, and a quick search shows that some small communities are using them for RL. Lastly, the 2021 paper gives significant weight to Gazebo for its ROS integration. Quoting the authors: “Gazebo would be preferred if one plans on developing not only for simulation but also for real systems”. Given all of this information, one question remains: is the focus artificial intelligence or real-world transfer? If real-world transfer is the focus, then an accurate simulator and ROS should be included. However, if the study of artificial intelligence is the priority, then simulation speed carries more weight in the decision.

In conclusion, given the reasons listed above, from a technical point of view MuJoCo seems to be the most appropriate simulator to start exploring Reinforcement Learning algorithms in robotics.

The Reinforcement Learning Community

In terms of the Reinforcement Learning community, one of the most important contributions seems to be OpenAI’s creation of Gym in 2016. The appeal of Gym was that it implemented a standardized way of programming RL environments. It also came with benchmark environments (Atari games, cart pole, mountain car, …) and served as an API for more complex simulation environments (MuJoCo). OpenAI members even created a well-documented tutorial called Spinning Up, which not only teaches how to install and use Gym, but also introduces the theory behind some well-known Deep Reinforcement Learning algorithms. The main simulator supported by OpenAI was MuJoCo; however, others tried to apply the Gym philosophy to other tools:

- Gazebo: gym-gazebo, gym-gazebo2, gym-ignition (IIT)

- Webots: gym-webots

- PyBullet: gym-pybullet-drones, pybullet-gym, Pybullet_Gym_Tutorial

This enumeration of GitHub repositories serves to show that Gym’s way of programming an RL environment was widely accepted by the community. Even the Istituto Italiano di Tecnologia has a Gym-based implementation for Gazebo. Despite all of this, Gym stopped receiving maintenance in 2021 (as shown in its GitHub repository). This led to the repositories above being archived and/or no longer maintained, which makes them lose their appeal.

So, if Gym is not a viable option, why would I spend two paragraphs talking about it? Well, because Gym was not entirely abandoned; it underwent something closer to a rebranding. The team that maintained Gym moved to a project called Gymnasium. This new toolkit is a fork of OpenAI’s Gym, and it is managed by the Farama Foundation. The Farama Foundation is a 501(c)(3) nonprofit organization dedicated to advancing the field of reinforcement learning through promoting better standardization and open source tooling for both researchers and industry.

Like OpenAI before it, the Farama team also implemented the Gymnasium API for MuJoCo. Other simulators seem to have few community implementations (or none), because Gymnasium is fairly recent. However, given that the community is already used to the structure of Gymnasium, it may only be a matter of time before the other simulators are supported as well. For now, MuJoCo may be the only simulator with a stable and well-maintained Gymnasium implementation.
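To make the idea of a standardized environment interface concrete, here is a minimal sketch that mimics the Gymnasium call pattern using only the Python standard library. `ToyReachEnv` and `run_episode` are hypothetical names invented for this illustration, and the one-dimensional task is a stand-in, not a MuJoCo environment. The only parts borrowed from Gymnasium are the shapes of the two calls: `reset` returns `(observation, info)` and `step` returns `(observation, reward, terminated, truncated, info)`.

```python
import random


class ToyReachEnv:
    """Hypothetical 1-D environment that mimics the Gymnasium call pattern.

    The agent moves along a line and is rewarded for getting close to 0.
    """

    def __init__(self, max_steps=50):
        self.max_steps = max_steps
        self._rng = random.Random()
        self._position = 0.0
        self._steps = 0

    def reset(self, seed=None):
        # Gymnasium-style reset: returns (observation, info).
        if seed is not None:
            self._rng.seed(seed)
        self._position = self._rng.uniform(-1.0, 1.0)
        self._steps = 0
        return self._position, {}

    def step(self, action):
        # Gymnasium-style step: returns
        # (observation, reward, terminated, truncated, info).
        self._position += max(-0.1, min(0.1, action))  # clip the action
        self._steps += 1
        reward = -abs(self._position)
        terminated = abs(self._position) < 0.05    # goal reached
        truncated = self._steps >= self.max_steps  # time limit hit
        return self._position, reward, terminated, truncated, {}


def run_episode(env, policy, seed=None):
    """Generic interaction loop; works with any env exposing reset/step."""
    observation, info = env.reset(seed=seed)
    total_reward, done = 0.0, False
    while not done:
        action = policy(observation)
        observation, reward, terminated, truncated, info = env.step(action)
        total_reward += reward
        done = terminated or truncated
    return total_reward


# A hand-written proportional policy stands in for a learned one.
episode_return = run_episode(ToyReachEnv(), policy=lambda obs: -obs, seed=0)
print(f"episode return: {episode_return:.3f}")
```

Because `run_episode` depends only on `reset` and `step`, the same loop should carry over to a Gymnasium MuJoCo environment by swapping the environment object, which is the practical benefit of the shared API.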

Why Follow Farama Foundation?

After analyzing the Farama Foundation GitHub account and their web page, it’s clear that I should try to use their resources as much as possible. Firstly, they are a group focused on making tools available for Reinforcement Learning research. Secondly, they have a large team maintaining the source code daily. Thirdly, by continuing the work of OpenAI’s Gym, they will likely inherit the community around it. Lastly, judging by the GitHub repositories under their account, they are working on topics that will greatly interest me, such as:

- Gymnasium (already mentioned): A standard API for single-agent reinforcement learning environments, with popular reference environments and related utilities (formerly Gym).

- PettingZoo: A standard API for multi-agent reinforcement learning environments, with popular reference environments and related utilities.

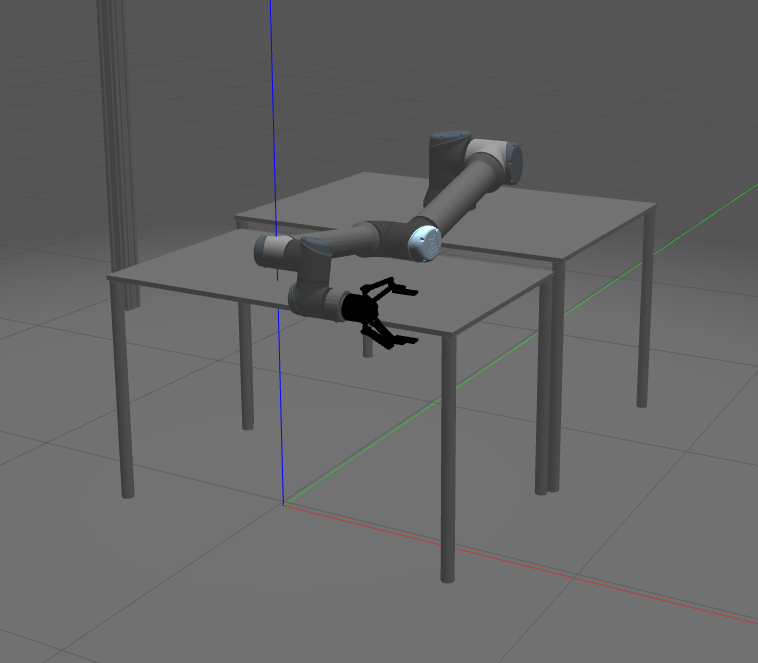

- Gymnasium-Robotics: A collection of robotics simulation environments for reinforcement learning.

- MO-Gymnasium (for Multi-Tasking): Multi-objective Gymnasium environments for reinforcement learning.

- Metaworld: Collections of robotics environments geared towards benchmarking multi-task and meta reinforcement learning.

Their GitHub account hosts more projects linked to Reinforcement Learning (which I encourage you to explore); however, these were the ones closest to my PhD research.

Final Choice

As mentioned in the introduction, the simulator of choice will be MuJoCo, at least for a year. The decision is supported by the fact that MuJoCo is a well-established simulator (with decent accuracy) with a well-developed and well-documented Reinforcement Learning framework integration (Gymnasium). It also meets the requirements of being open-source, supporting various sensor modalities, and offering many benchmarks for testing RL algorithms.

I am also choosing MuJoCo on the basis that transferring the trained models to real hardware is not the focus here. In fact, real-world transfer is planned for my third year of research and should not delay the progress of these first steps. There are also some facts suggesting that this choice is compatible with future decisions:

- Although MuJoCo describes robots with its own file format (MJCF), it is documented that the simulator can also read URDF. Additionally, there are some URDF-to-MJCF converters online.

- Using MuJoCo does not prevent the use of ROS. There are some repositories (including some from robotics companies such as Shadow Robot) that integrate ROS with MuJoCo: mujoco_ros_pkgs, mujoco_ros_sim, Digit-MuJoCo-ROS2, Spot-MuJoCo-ROS2, Atlas-MuJoCo-ROS2.

Extra

In this discussion about simulators, I purposely left out Nvidia’s Isaac Gym. This tool is very recent (launched in 2022), and I could not find community feedback on its usability, only eagerness to start using it. Isaac Gym offers a high-performance learning platform to train policies for a wide variety of robotics tasks directly on the GPU. Isaac Gym is a simulation environment based on Omniverse but geared towards reinforcement learning research. Although this tool was not considered for now, I think it is important to keep it on the radar!